It is often claimed that more than 90 per cent of all the world’s data was generated in the last two years, and text makes up a large share of it. Just think about how much you type each day. Fittingly, I was thinking about this while typing this blog. Beyond being strings of characters, text carries information that can be extremely insightful if harnessed well. Data scientists have therefore put text data to work in countless applications, and so the field of text mining, or text analytics, was born. In this blog, we discuss 5 applications of text analytics.

Document Retrieval

Suppose you have a collection of documents (news articles, for example). A single document can cover many topics. For example, an article about a legal investigation of a pharmaceutical company will have content related to topics like government, politics and medicine. What if we want to retrieve the articles in this collection that discuss particular topics? Imagine a customer service centre where a team replies to customer queries by emailing them relevant articles. If the customers’ queries can be grouped by the type of problem, every query in a group can be answered by sending the same article. The entire process can largely be automated.

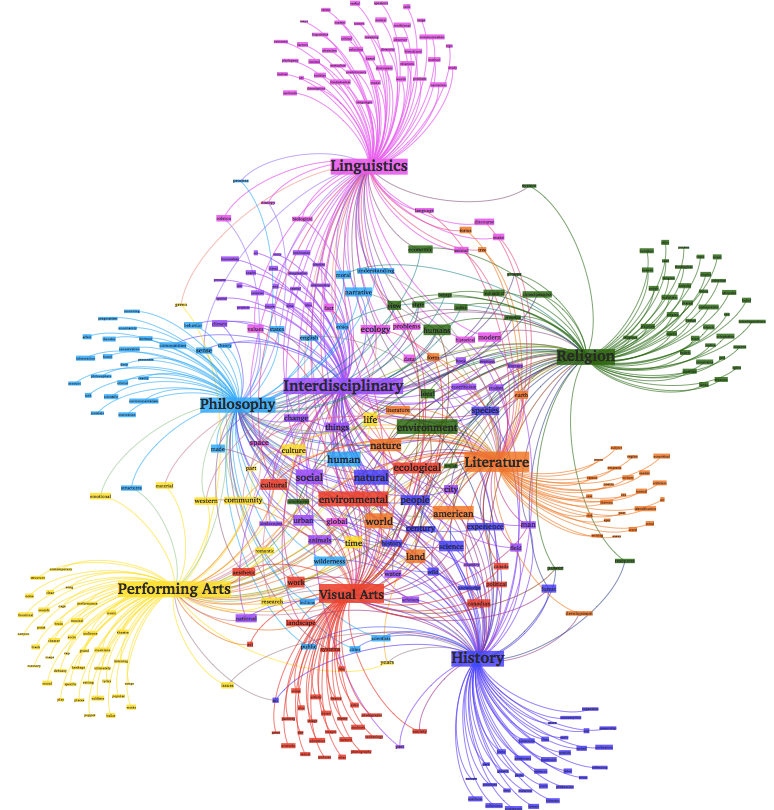

Methods like topic modelling help you do this. We decide the number of topics, or ‘the clusters’, in advance. The model works by associating words and documents with these topics: it estimates the probability of each word given a topic and the probability of each topic given a document. For a detailed explanation, see the following blog, which explains topic modelling using an algorithm named PLSA (Probabilistic Latent Semantic Analysis) along with an implementation:

https://towardsdatascience.com/topic-modelling-with-plsa-728b92043f41

Spam Filtering

No one has ever enjoyed reading advertisements. And what could be worse than having them land in your email inbox? This is where spam filtering saves you. It helps keep the Nigerian prince asking for money at bay. So how does it work in practice? Suppose you have a large number of emails labelled spam or not spam, along with their text content. The next step is to look at which words appear frequently in spam emails. If an email contains many of those words, the probability that it is spam, given those words, is high. A classic way to calculate these probabilities is Naive Bayes, which applies Bayes’ theorem under the simplifying assumption that words occur independently of each other given the class.
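The following is a minimal sketch of a multinomial Naive Bayes spam filter in plain Python, assuming whitespace tokenisation, add-one (Laplace) smoothing and a tiny hypothetical training set. The function names `train_naive_bayes` and `spam_probability` are illustrative, not part of any library. Working in log space avoids multiplying many small probabilities together.

```python
import math
from collections import Counter


def train_naive_bayes(emails):
    """emails: list of (text, label) pairs where label is "spam" or "ham"."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in emails:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab


def spam_probability(text, model):
    """Return P(spam | words) under the naive independence assumption."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    log_scores = {}
    for label in ("spam", "ham"):
        # log P(label) + sum of log P(word | label), with add-one smoothing.
        score = math.log(doc_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocab:
                continue  # words never seen in training are ignored
            count = word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(vocab)))
        log_scores[label] = score
    # Turn the two log scores into a normalised probability (numerically stable).
    top = max(log_scores.values())
    spam = math.exp(log_scores["spam"] - top)
    ham = math.exp(log_scores["ham"] - top)
    return spam / (spam + ham)


# Hypothetical training data.
emails = [
    ("win money now claim your prize", "spam"),
    ("urgent transfer money to the prince", "spam"),
    ("cheap offer win a free prize", "spam"),
    ("meeting agenda for tomorrow", "ham"),
    ("please review the project report", "ham"),
    ("lunch tomorrow with the team", "ham"),
]
model = train_naive_bayes(emails)
print(round(spam_probability("claim your free prize now", model), 3))
print(round(spam_probability("project meeting tomorrow", model), 3))
```

A real filter would train on thousands of labelled emails and use a proper tokeniser, but the counting and smoothing steps stay the same.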

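The key trade-off is catching as much spam as possible while keeping false positives (legitimate emails wrongly flagged as spam) to a minimum, because losing an important email is usually worse than letting a spam message through. The algorithm is very simple to implement yet extremely effective, and it has been in use since the 1990s.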
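One way to see this trade-off is to vary the score above which an email is flagged. The sketch below assumes a classifier has already produced a spam score for each email; the scores and labels are hypothetical, and `confusion_at` is an illustrative helper. Raising the threshold lowers false positives but lets more spam slip through.

```python
def confusion_at(threshold, scored_emails):
    """Count outcomes when emails scoring >= threshold are flagged as spam.

    scored_emails: list of (spam_score, is_spam) pairs.
    Returns (true_pos, false_pos, false_neg, true_neg).
    """
    tp = fp = fn = tn = 0
    for score, is_spam in scored_emails:
        flagged = score >= threshold
        if flagged and is_spam:
            tp += 1
        elif flagged and not is_spam:
            fp += 1  # legitimate email sent to the spam folder
        elif not flagged and is_spam:
            fn += 1  # spam that slipped into the inbox
        else:
            tn += 1
    return tp, fp, fn, tn


# Hypothetical classifier scores paired with the true labels.
scored = [(0.98, True), (0.91, True), (0.75, True), (0.62, False),
          (0.55, True), (0.40, False), (0.20, False), (0.05, False)]

for t in (0.5, 0.7, 0.9):
    tp, fp, fn, tn = confusion_at(t, scored)
    print(f"threshold={t}: false positives={fp}, missed spam={fn}")
```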

Sentiment Analysis

Many readers will have heard of the scandal involving a company named Cambridge Analytica. Allegedly, it illegally used the Facebook data of millions of American users to manipulate the content they consumed in order to change their political opinions. Christopher Wylie, the whistleblower, has explained how the firm used the Facebook data to understand the opinions, or the sentiment, of the masses and then created micro-targeted content to psychologically change people’s perceptions. Although this was completely unethical, it shows how powerful analytics can be.

Sentiment analysis is about understanding the emotional component behind people’s opinions. People express their opinions in many ways, including posts and comments; in other words, as text. Consider a product on Amazon with thousands of reviews. Reading every one of them manually is impractical. We want an algorithm that tells us the sentiment of these reviews so that we know whether the product is doing well. This is where sentiment analysis comes into the picture.

Although sentiment analysis is a powerful method, it has limitations, because human language is complex. Consider the following sentences:

I want that burger so bad

I ate the burger. It was bad

An algorithm that relies on individual words could well label both of them as negative because of the word “bad”. But we know that the first one is, in fact, strongly positive.
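A naive lexicon-based scorer makes the problem easy to reproduce. This is a minimal sketch, assuming a tiny hand-made word-polarity dictionary (`LEXICON`, hypothetical) and a hypothetical `lexicon_sentiment` function that simply sums word scores. It is not how any particular sentiment library works. Because it looks only at individual words, it labels both burger sentences as negative.

```python
# A tiny hypothetical lexicon; real systems use far larger, curated resources.
LEXICON = {"good": 1, "great": 2, "love": 2, "tasty": 1,
           "bad": -1, "awful": -2, "hate": -2, "bland": -1}


def lexicon_sentiment(text):
    """Sum the polarity of each known word and map the total to a label."""
    words = text.lower().replace(".", " ").split()
    score = sum(LEXICON.get(w, 0) for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for sentence in ("I want that burger so bad", "I ate the burger. It was bad"):
    print(sentence, "->", lexicon_sentiment(sentence))  # both print "negative"
```

Handling cases like “so bad” meaning “very much” requires models that take context into account, such as classifiers trained on labelled sentences rather than fixed word lists.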

Human Resources

With hundreds of job portals, a single job opening can attract a huge number of applications. It becomes difficult for recruiters and human resource professionals to manually screen each resume and select a few. Some startups offer software as a service to make recruiters’ lives easier. These tools can scan resumes, look for desired skills in the form of keywords, and shortlist relevant, promising candidates from the pile.
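The keyword-matching idea can be sketched in a few lines. This is a minimal illustration, not how any commercial screening product works: the helpers `skill_coverage` and `shortlist`, the candidate resumes, the required skills and the 50% coverage cut-off are all hypothetical. Word-boundary matching keeps “java” from matching inside “javascript”.

```python
import re


def skill_coverage(resume_text, required_skills):
    """Return (fraction of required skills mentioned, set of skills found)."""
    text = resume_text.lower()
    found = set()
    for skill in required_skills:
        # \b word boundaries avoid matching "java" inside "javascript".
        if re.search(r"\b" + re.escape(skill.lower()) + r"\b", text):
            found.add(skill)
    return len(found) / len(required_skills), found


def shortlist(resumes, required_skills, min_coverage=0.5, top_k=3):
    """Rank candidates by skill coverage and keep the best top_k."""
    scored = []
    for name, text in resumes.items():
        coverage, found = skill_coverage(text, required_skills)
        if coverage >= min_coverage:
            scored.append((coverage, name, sorted(found)))
    # Highest coverage first; ties broken alphabetically by name.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return scored[:top_k]


# Hypothetical resumes and job requirements.
resumes = {
    "Asha": "Data analyst with Python, SQL and machine learning experience.",
    "Ben": "Frontend developer skilled in JavaScript and CSS.",
    "Chen": "Backend engineer: Java, SQL, some Python scripting.",
}
required = ["python", "sql", "machine learning", "java"]
for coverage, name, skills in shortlist(resumes, required):
    print(name, coverage, skills)
```

Simple keyword matching misses synonyms and context, which is why real screening tools combine it with more sophisticated text analysis.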

Chatbots

This is a no-brainer, isn’t it? Chatbots are revolutionizing the way information is consumed, and many websites now deploy their own. And why not! They save you a ton of time. Websites can hold a lot of information, and it can sometimes be hard to find the particular piece you are looking for. A well-trained chatbot can answer your questions in a jiffy. This not only saves visitors time but can also save the company a lot of money it would otherwise spend on customer call centres.

Chatbots can be built in many ways, from simple to complex. An important part of many of them is information retrieval: scraping the website’s content and structuring an answer from it.
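The retrieval step can be sketched with TF-IDF vectors and cosine similarity, one common simple approach (not necessarily what any given chatbot uses). This sketch assumes the website has already been scraped into a list of text passages; the scraping itself is not shown, and the `FaqRetriever` class and the store passages are hypothetical. The IDF formula is the smoothed variant that scikit-learn’s `TfidfVectorizer` uses by default.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lower-case the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class FaqRetriever:
    """Answer a question with the most similar passage using TF-IDF vectors."""

    def __init__(self, passages):
        self.passages = passages
        docs = [Counter(tokenize(p)) for p in passages]
        n = len(docs)
        df = Counter(w for doc in docs for w in doc)
        # Smoothed inverse document frequency: rarer words weigh more.
        self.idf = {w: math.log((1 + n) / (1 + c)) + 1 for w, c in df.items()}
        self.vectors = [self._vectorize(doc) for doc in docs]

    def _vectorize(self, counts):
        """Build a unit-length TF-IDF vector, ignoring unknown words."""
        vec = {w: tf * self.idf[w] for w, tf in counts.items() if w in self.idf}
        norm = math.sqrt(sum(v * v for v in vec.values()))
        return {w: v / norm for w, v in vec.items()} if norm else {}

    def answer(self, question):
        """Return the best-matching passage, or None if nothing overlaps."""
        q = self._vectorize(Counter(tokenize(question)))
        # Cosine similarity is the dot product of unit-length vectors.
        scores = [sum(q.get(w, 0.0) * v for w, v in vec.items()) for vec in self.vectors]
        best = max(range(len(scores)), key=scores.__getitem__)
        return self.passages[best] if scores[best] > 0 else None


# Hypothetical passages scraped from a shop's website.
passages = [
    "Our store is open from 9am to 6pm, Monday to Saturday.",
    "Orders ship within two business days and tracking is emailed to you.",
    "You can return any item within 30 days for a full refund.",
]
bot = FaqRetriever(passages)
print(bot.answer("How many days do I have to return an item?"))
```

Returning a whole passage keeps the sketch simple; a more capable chatbot would go on to extract or generate a concise answer from the retrieved text.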

In addition, you might have noticed the word predictions offered by Google’s keyboard or any other smartphone keyboard. The keyboard learns your typing patterns and the common phrases you use, then suggests them the next time it predicts you might need them. This is called language modelling.
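The simplest form of this idea is a bigram model, which predicts the next word from counts of word pairs seen before. Production keyboards use far larger (often neural) language models, so treat this as a minimal sketch only; the `BigramPredictor` class and the example phrases are hypothetical.

```python
from collections import Counter, defaultdict


class BigramPredictor:
    """Suggest the next word from counts of word pairs seen in past typing."""

    def __init__(self):
        # Maps a word to a Counter of the words that followed it.
        self.next_counts = defaultdict(Counter)

    def learn(self, text):
        """Record every adjacent (previous, next) word pair in the text."""
        words = text.lower().split()
        for prev, nxt in zip(words, words[1:]):
            self.next_counts[prev][nxt] += 1

    def suggest(self, prev_word, k=3):
        """Return up to k most frequent followers of prev_word."""
        counts = self.next_counts.get(prev_word.lower())
        if not counts:
            return []
        return [w for w, _ in counts.most_common(k)]


model = BigramPredictor()
model.learn("see you soon")
model.learn("see you later")
model.learn("talk to you soon")
print(model.suggest("you"))  # ['soon', 'later']
```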

I hope this gives you an idea of how text can be used in data science. Now that you have a high-level picture, don’t wait: get started and get your hands dirty.

To get started with text analytics, check out the following blogs: