medium.com

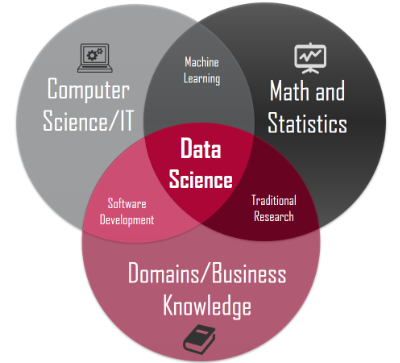

Data Scientist is regarded as the sexiest job of the 21st century. It is a high paying lucrative jobs which comes with a lot of responsibility and commitment. Any professional needs to master state-of-the-art skills and technologies to become a Data Scientist in the modern world. It is a profession where people from different disciplines could fit in as there are a plethora of specialties embedded in a Data Scientist role.

Data Science is not a present-day phenomenon, however. The various skills like Machine Learning, Deep Learning, AI, and so on were there since the advent of computers. The recent hype is a result of the large volumes of data that are getting generated and the massive computation power that modern-day system possess. This has led to the rise of the concept of Big Data which we would cover later in this blog.

Now, the Data Scientist job may look like a Gold to outside but it certainly has its struggles and is not without major challenges. The dynamism of the role requires one to be a master of several skills which becomes overwhelming at times. In this article, we would cover the major challenges faced by a Data Scientist and how you could overcome it.

1. Misconception About the Role –

In big corporations, a Data Scientist is regarded as a jack of all trades who is assigned with the task of getting the data, building the model, and making right business decisions which is a big ask for any individual. In a Data Science team, the role should be split among different individuals such as Data Engineering, Data Visualizations. Predictive Analytics, model building, and so on.

The organization should be clear about their requirement and specialize the task the Data Scientist needs to perform without putting unrealistic expectations on the individual. Though a Data Scientist possesses the majority of the necessary skills, distributing the task would ensure flawless operation of the business. Thus a clear description and communication about the role are necessary before anyone starts working as a Data Scientist in the company.

2. Understanding the Right Metric and The KPI –

Due to the lack of understanding among the majority of stakeholders about the role of Data Scientist, they are expected to wave a magic wand and solve every business problem in a hitch which is never the case. Every business should have the right metric which goes in sync with its objectives. The metric would be parameters to evaluate the performance of the predictive model while the Key Performance Indicators which let the business work on the areas to improve.

A Data Scientist could build a model and gets high accuracy only to realize that the metric used doesn’t help the business at all. Each and every company has different parameters or metrics to identify their performance and thus defining one with clarity before starting any Data Science work is the key. The metrics and the KPIs should be identified and laid out and communicated to the Data Scientist who would then work accordingly.

3. Lack of Domain Knowledge –

This challenge is more applicable to a beginner Data Scientist in an organization than who has more years of experience working as a Data Scientist in the same organization. Someone who is just starting or is a fresh graduate has all the statistical skills and techniques to play with the data but without the right domain understanding, it is difficult to get the right results. A person with a particular domain knowledge who know what works and what doesn’t which is not the cause for a newbie.

Though domain expertise doesn’t come overnight and it takes time spending and working in a particular domain, one could, however, take up datasets across various domains and try to apply their Data Science skills to solve the problem. In doing so, the person would get accustomed to the data across various domains and get an idea about the variables or features that generally used.

4. Setting up the Data Pipeline –

In the modern world, we don’t deal with megabytes of data anymore, instead of with deal with terabytes of unstructured data generated from a multitude of sources. This data is voluminous in nature and traditional systems are incapable of handling such quantity. Hence the concept of Hadoop or Spark came into the picture which stores data in parallel clusters and processes it.

Thus for batch or real-time data processing, it is necessary that the data pipeline is properly set up beforehand to allow the continuous flow of data from external sources to the big data ecosystem which would then enable Data Scientists to use the data and process it further.

5. Getting the Right Data –

Quality is better than quantity is the call of the hour in this case. A Data Scientist role involves understanding the question asked and answer the question by analyzing the data using the right tools and techniques. Now, once the requirement is clear, it’s time to get the right data. There is no shortage of data in the present analytical Eco space but having enough data without much relevance would lead to a model failing to solve the actual business problem.

Thus to build an accurate model which works well with the business it is necessary to get the right data with the most meaningful features at the first instance. To overcome this data issue, the Data Scientist would need to communicate with the business to get enough data and then use domain understanding to get rid of the irrelevant features. This is a backward elimination process but one which often comes handy in most occasions.

6. Proper Data Processing –

A Data Scientist spends most of the time pre-processing the data to make it ideal for building a model. It’s often a hectic task which includes cleaning the data, removing outliers, encoding the variables and so on. Unlike in hackathons or boot camps, the real-life data is generally pretty unclean and it requires a lot of data wrangling using different techniques.

The drawback is that if the model is built on dirty data it would behave strangely when tested on an unknown set of data. Suppose the data has a lot of outliers or noise which are not removed and you train a model with that data, then the model would learn by heart all the unnecessary patterns in the data resulting in high variance. This high variance would cause the model to not generalize well and perform poorly on the new data set. No wonder, Data Scientists spend eighty percent of their time only cleaning the data and making it ready.

To overcome this data pre-processing issue, a Data Scientist should put all the effort in identifying all possible anomalies that could be present in the data and come up with solutions to get rid of those. Once that is done, the model would be trained on cleaned data which would allow it to generalize well to the patterns and get good performance of the test data.

7. Choosing the Right Algorithm –

This a subjective challenge as there is no such algorithm which works best on a dataset. If there is a linear relationship between the feature and the target variables, one generally chooses the linear models such as Linear Regression, Logistic Regression while for non-linear relationship the tree based models like Decision Tree, Random Forest, Gradient Boosting, etc., works better. Hence it is suggested to try different models on a dataset and evaluate based on the metric given. Once which minimizes the mean squared error or has a greater ROC curve is eventually considered to be the go-to model. Moreover the ensemble models i.e., the combination of different algorithms together generally provides better results.

8. Communication of the Results –

Managers or Stakeholders of a company are often ignorant of the tools and the working structure of the models. They are required to make key business decisions based on what they see in front of charts or graphs or the results communicated by a Data Scientist. Communicating the results in technical terms would not help much as people at the helm would struggle to decide what’s being said. Thus one explain in layman terms their findings and even use the metric and the KPIs finalized at the start to present their findings. This would entail the business to evaluate their performance and conclude on what key grounds improvements has to be done for the growth of the business.

9. Data Security –

Data Security is a major challenge in today’s world. The plethora of data sources which are interconnected has made it susceptible to attacks from the hackers. Thus the Data Scientist are struggling to get consent to use the data because of the lack of certainty and the vulnerability that clouds it. Following the global data protection is one way to ensure data security. Use of cloud platforms or additional security checks could also be implemented. Additionally, Machine Learning could be also used to protect against cyber-crimes or fraudulent behaviors.

Conclusion

Despite all the challenges faced, Data Scientist is the most in-demand role in the market and one should not let the challenges be a hindrance to achieve their goal.

As several professionals are trying to enter this field, it is necessary that they learn to programme first, and Python is an ideal language to start off their programming journey.

Dimensionless has several blogs and training to get started with R, and Data Science in general.

Follow this link, if you are looking to learn Data Science Course Online!

Additionally, if you are having an interest in learning Data Science, Learn online Data Science Course to boost your career in Data Science.

Furthermore, if you want to read more about data science, read our Data Science blogs